Principles for Continuous Improvement: Building a Data Dashboard

“What gets measured gets managed” is an oft-repeated line in the nonprofit performance management world, and one that we at Third Sector have found holds true. That’s why we work closely with our government and community partners to ensure that “what gets managed” is a set of long-term, meaningful outcomes showing that individuals and families are better off as a result of a publicly funded program. We have also learned that actively and collaboratively managing “what gets measured” can make a program even more successful. Working together, government agencies and their providers can use data to continuously improve services and processes and, ultimately, improve client and community outcomes.

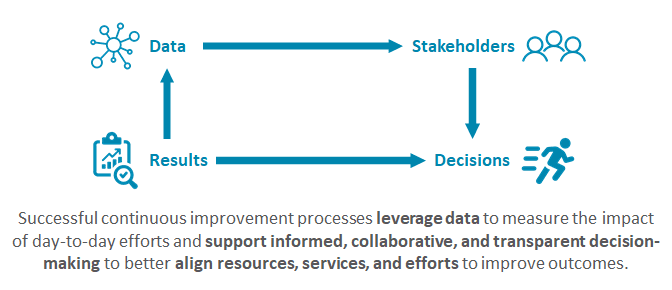

Successful continuous improvement processes leverage data to measure the impact of day-to-day efforts and support informed, collaborative, and transparent decision-making to better align resources, services, and efforts to improve outcomes.

During these uncertain times, continuous improvement processes are essential to ensuring that vulnerable communities are not left out of relief and recovery efforts. This three-part blog series lays out the steps to creating a continuous improvement process that helps publicly funded programs achieve their desired outcomes. In this first post, we’ll explore how to build a data dashboard that can serve as the basis for continuous improvement conversations. In future posts, we’ll share lessons learned on structuring check-ins to discuss the data and determine next steps.

What will you measure?

The first step to setting up a data-driven continuous improvement process is deciding what you would like to measure. Identify a few outcome goals (e.g., “increase in wages,” “family reunification,” “stable housing”) and metrics to measure these outcomes (e.g., “change in hourly pay,” “days to family reunification,” “length of stay in a qualifying permanent housing location”). Work with your team, providers, and other community members to clearly articulate these outcomes and metrics so that everyone shares the same understanding of program goals. These goals will ultimately drive the direction of the program and its continuous improvement efforts, so it is especially important to ensure that the outcomes you are trying to “manage to” are the ones that the community and service recipients care about and find meaningful.

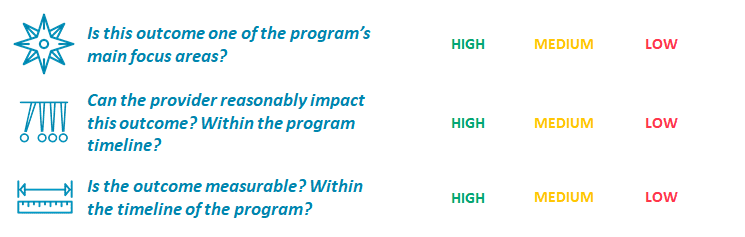

But how do you decide what to measure and focus on amid a wealth of information and competing priorities? We recommend using the framework shown above, rating each attribute on a high/medium/low scale to identify the top candidates. We also suggest focusing your data dashboard on three to five short- and medium-term metrics that can drive regular service improvements and are indicative of longer-term outcomes. Those longer-term outcomes are important to check in on regularly, but they may not change quickly enough to inform a real-time action plan.
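To make the high/medium/low rating concrete, here is a minimal Python sketch that converts each rating to points, sums the points for each candidate metric, and keeps the top few. The attribute names (`meaningful`, `data_available`, `responsive`), the ratings, and the 3/2/1 point values are hypothetical placeholders rather than the framework itself; substitute whatever attributes your team agrees on.

```python
# Hypothetical sketch: attribute names, ratings, and point values are
# illustrative placeholders, not a prescribed scoring framework.
SCALE = {"high": 3, "medium": 2, "low": 1}

# Each candidate metric is rated high/medium/low on each attribute.
candidates = {
    "change in hourly pay": {
        "meaningful": "high", "data_available": "high", "responsive": "medium"},
    "days to family reunification": {
        "meaningful": "high", "data_available": "medium", "responsive": "medium"},
    "length of stay in permanent housing": {
        "meaningful": "high", "data_available": "medium", "responsive": "low"},
    "workshop attendance": {
        "meaningful": "low", "data_available": "high", "responsive": "medium"},
}


def score(ratings: dict) -> int:
    """Sum the point value of every high/medium/low rating for one metric."""
    return sum(SCALE[rating] for rating in ratings.values())


def top_candidates(cands: dict, n: int = 3) -> list:
    """Return the n highest-scoring metric names (ties keep input order)."""
    ranked = sorted(cands, key=lambda name: score(cands[name]), reverse=True)
    return ranked[:n]


for name in top_candidates(candidates, n=3):
    print(f"{name}: {score(candidates[name])}")
```

An unweighted sum treats every attribute as equally important. If meaning to the community matters most, one option is to multiply that attribute’s points by a weight before summing.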

How will you measure it?

Next, clarify and plan a measurement strategy for the identified outcomes and metrics. Go beyond identifying data sources to articulate how you will compare performance and look at trends. You may want to examine trends in one metric over time, compare against a historical or regional baseline, or compare and contrast providers with different service models.
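As one illustration of pairing a trend with a baseline comparison, the Python sketch below computes a monthly median wage, its month-over-month change, and its gap from a historical baseline. The wage figures and the `HISTORICAL_BASELINE` value are invented for the example.

```python
from statistics import median

# Hypothetical hourly wages reported by participants each month.
monthly_wages = {
    "2020-01": [15.0, 16.5, 14.0],
    "2020-02": [15.5, 17.0, 14.5],
    "2020-03": [16.0, 17.5, 15.0],
}
HISTORICAL_BASELINE = 15.0  # hypothetical median wage from a prior period


def trend_vs_baseline(data: dict, baseline: float) -> list:
    """Return (month, median, change from prior month, gap from baseline)."""
    rows = []
    previous = None
    for month in sorted(data):  # "YYYY-MM" strings sort chronologically
        current = median(data[month])
        change = None if previous is None else round(current - previous, 2)
        rows.append((month, current, change, round(current - baseline, 2)))
        previous = current
    return rows


for row in trend_vs_baseline(monthly_wages, HISTORICAL_BASELINE):
    print(row)
```

The median is used here because a few unusually high or low wages would pull an average around; swapping in `statistics.mean` is a one-line change if your team prefers it.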

Be sure to disaggregate data by race, geography, and other characteristics of the subpopulations enrolled in the program. Use this information both to understand the basic demographics of program participants and to understand outcome achievement: is the program more or less successful in serving specific groups of people?
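A minimal Python sketch of disaggregation follows. It groups participant records by one field and reports both how many people each group enrolled (the demographic view) and the share who achieved the outcome (the outcome view). The records, field names, and placeholder labels such as “Group A” and “North” are hypothetical.

```python
from collections import defaultdict

# Hypothetical records; "Group A/B/C" and "North/South" are placeholders.
participants = [
    {"id": 1, "race": "Group A", "region": "North", "stably_housed": True},
    {"id": 2, "race": "Group A", "region": "South", "stably_housed": False},
    {"id": 3, "race": "Group B", "region": "North", "stably_housed": True},
    {"id": 4, "race": "Group C", "region": "South", "stably_housed": True},
    {"id": 5, "race": "Group C", "region": "North", "stably_housed": False},
    {"id": 6, "race": "Group B", "region": "South", "stably_housed": True},
]


def disaggregate(records: list, field: str, outcome: str) -> dict:
    """Map each value of `field` to (number enrolled, outcome rate)."""
    counts = defaultdict(lambda: [0, 0])  # group -> [enrolled, achieved]
    for record in records:
        counts[record[field]][0] += 1
        counts[record[field]][1] += int(record[outcome])
    return {group: (n, achieved / n) for group, (n, achieved) in counts.items()}


print(disaggregate(participants, "race", "stably_housed"))
print(disaggregate(participants, "region", "stably_housed"))
```

With real data, very small groups can produce rates that swing widely from period to period, so it may help to display the enrollment count next to each rate.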

Historically, the gold standard of impact evaluation has been the randomized controlled trial (RCT). While RCTs can measure a service’s impact and its role in causing the outcomes achieved, they typically produce an end-of-project snapshot that is not useful for regular, real-time continuous improvement conversations. We recommend keeping measurement simple and focused on key trends and comparisons, which will become not only the core components of your data dashboard but also the meat of your continuous improvement conversations.

How will you display the analysis?

Once you’ve determined what you will measure and how, consider how you will display this information so that it is readily accessible and useful to the stakeholders informing the continuous improvement process. As you build this data dashboard or report, work with providers and other partners to choose visuals that make trends easy to understand at a glance. After all, you want continuous improvement conversations to dig into the “why” behind the trends rather than debate whether there is a trend in the first place.

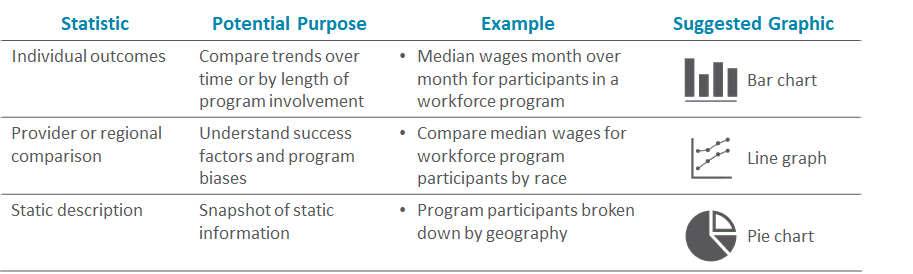

Be clear on the unit of analysis for different metrics. For example, you may want to understand how a group of individuals who entered a housing program with criminal justice histories fared at the three-, six-, and twelve-month marks (an individual-level analysis). Or you may be comparing two providers that take different approaches to housing similar individuals to see which has more short-term and long-term success (a provider comparison). Or you may be assessing whether providers were able to increase stable housing rates after a local housing subsidy was introduced (a system assessment).
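The individual-level analysis and the provider comparison can be sketched in a few lines of Python. The hypothetical cohort below records how many months each participant has stayed in permanent housing; `retention` computes the share still housed at the three-, six-, and twelve-month marks, and `by_provider` repeats that calculation for each provider. The sketch assumes every participant entered at least twelve months before the reporting date, so no stay is cut short simply because it is still ongoing. A system assessment would instead compare rates from before and after the subsidy was introduced, which this sketch does not cover.

```python
# Hypothetical cohort: (provider, months stayed in permanent housing) for
# participants who entered with criminal justice histories. Assumes every
# participant enrolled at least 12 months ago, so stays are not truncated.
cohort = [
    ("Provider A", 14), ("Provider A", 7), ("Provider A", 2), ("Provider A", 12),
    ("Provider B", 13), ("Provider B", 4), ("Provider B", 9), ("Provider B", 1),
]
MILESTONES = (3, 6, 12)  # months


def retention(stays: list, milestones=MILESTONES) -> dict:
    """Share of individuals still housed at each milestone (individual level)."""
    n = len(stays)
    return {m: sum(stay >= m for stay in stays) / n for m in milestones}


def by_provider(records: list) -> dict:
    """Repeat the retention calculation for each provider (provider comparison)."""
    groups = {}
    for provider, months in records:
        groups.setdefault(provider, []).append(months)
    return {provider: retention(stays) for provider, stays in groups.items()}


print(retention([months for _, months in cohort]))
print(by_provider(cohort))
```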

Consider when visuals, such as simple bar graphs, demonstrate trends better than raw numbers. As a rule of thumb, bar charts are great for showing a single metric over time (e.g., median wages month over month for participants in a statewide workforce program); line graphs help you compare multiple providers, regions, or participant populations on a single metric over time (e.g., the same median wages broken out by provider, region, or participant race); and pie charts are best for static descriptive statistics (e.g., program participants by geography).
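The bar/line/pie rule of thumb can also be written down as a small decision function, which some teams may find handy when reviewing a draft dashboard. This Python sketch is our own encoding of that rule; the parameter names are hypothetical, and the function deliberately raises an error for cases the rule does not cover rather than guessing.

```python
def suggest_chart(over_time: bool, n_groups: int = 1, composition: bool = False) -> str:
    """Suggest a chart type following the bar/line/pie rule of thumb.

    over_time:   the metric is tracked across periods (e.g. month over month)
    n_groups:    how many providers, regions, or populations are compared
    composition: a static descriptive breakdown (e.g. participants by geography)
    """
    if over_time:
        # Several groups on one metric over time -> line graph;
        # a single metric over time -> bar chart.
        return "line graph" if n_groups > 1 else "bar chart"
    if composition:
        return "pie chart"
    raise ValueError("the rule of thumb does not cover this case")


print(suggest_chart(over_time=True))                     # median wages by month
print(suggest_chart(over_time=True, n_groups=4))         # the same, by region
print(suggest_chart(over_time=False, composition=True))  # participants by geography
```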

Performance-Based Contracting: ECEAP Case Study

Third Sector is working with Washington State’s Department of Children, Youth, and Families’ Office of Innovation, Alignment and Accountability (OIAA) to help selected teams across the agency identify and incorporate key quality and outcome measures in contracts for client services. With intensive support from Third Sector and OIAA staff, these teams aim to use provider performance data to understand what drives outcomes and to support continuous improvement.

One of the first teams involved in the project was the Early Childhood Education and Assistance Program (ECEAP). ECEAP is Washington’s pre-kindergarten program, which prepares 3- and 4-year-old children from families furthest from opportunity for success in school and in life. ECEAP brought to the process an unwavering commitment to racial equity and a robust, pre-existing, strengths-based continuous quality improvement (CQI) structure.

What will you measure? To start, ECEAP worked with its contracted service providers to create a logic model outlining the program’s core components and measures of success. ECEAP then selected measures by applying an equity lens to criteria such as: Does research or data analysis show that the contracted services influence the measure? Can data on the measure be tracked regularly and accurately? Does the measure support the advancement of racial equity? Do contractors have sufficient influence on the client outcome measure to be held accountable for it in contracts? Are stakeholders aligned on the importance of the measure? Is family voice meaningfully incorporated into the process?

How will you measure it? The next step was to compare current and historical provider performance for each selected measure and to collaborate with stakeholders (e.g., providers and families) to develop metrics and targets. Data on the metrics is regularly shared and analyzed with providers through their existing continuous improvement process to ensure that it is understood within the context of each provider’s program. The ECEAP team and providers now use a more detailed set of targeted provider performance data, alongside a broad range of other program data, to improve services: for instance, by increasing vision and hearing screening completion rates to improve children’s capacity to learn.

How will you display the analysis? As one way to support ongoing data sharing and analysis, ECEAP is considering further developing data dashboards, or visual displays of several metrics, to better support providers’ CQI processes in ECEAP’s comprehensive focus areas of preschool education, child health, and family engagement. In the meantime, ECEAP continues to share and learn from the data with its providers through Excel tables and reports generated by its integrated data management system.

In answering all three of these questions and putting together your data dashboard, let usefulness be your guide. This data dashboard is your first touchpoint for “what gets managed” and will serve as the basis of regular continuous improvement conversations, so it should reflect what government, providers, and other community partners agree will be most helpful for informing program changes in pursuit of long-term outcome goals. And don’t expect to get it right the first time. Continuous improvement conversations will surface new data points and comparisons that would be helpful to see, as well as ones that are not as relevant for regular review. Expect the dashboard to be a living document that evolves over time in service of the ongoing conversation.

For more data dashboard examples or to explore how a data dashboard might support your program’s goals, don’t hesitate to reach out to us. And stay tuned for our next blog in this series, which will highlight examples of how to structure a collaborative and productive multi-stakeholder conversation that drives continuous improvement.